How We Made Songs and Satire About the US Election with AI Avatars

Who said politics can't be fun, eh‽

Elections tend to follow a well-worn script: not so much in their outcomes as in how the news media cover them.

There’s the reporter standing in front of the polling station, fizzing with excitement, confirming that yes, indeed, people are voting. There’s the panel of pundits, brows furrowed, spinning theories with the confidence of a weatherman whose forecast is already heading south. Then comes the inevitable vox pop: the solemn voter explaining they chose Biden because under Kennedy “the country is going to the dogs” and “democracy must prevail”.

And finally, the circus of the grand finale: the victory speech, the concession, and a marathon of over-analysis that stretches on longer than the campaign itself.

But must it be this way? In a world increasingly shaped by artificial intelligence, could election coverage be… different?

That’s the question we explored with Project L, an experiment undertaken by the MA Multimedia Journalism students at Bournemouth University, designed to test how AI might reshape journalism.

For instance, what if political commentary came from AI avatars in short, snappy video bursts—stripped of exaggerated theatrics?

How might that look?

Meet our very own Ram Sengupta—well, his digital avatar, all the way from New Delhi, complete with a Harry Potter-style talking portrait.

Then there’s Alina Dolea, who had plenty to say about the drama unfolding on election night.

They weren’t alone. Darren Lilleker and Einar Thorsen shared sharp insights from Bournemouth, Ahmed Nasralla weighed in from Freetown, and Rajiv Satyal (yes, @funnyIndian, the comedian) brought his unique perspective from Los Angeles.

Curious to see how it all came together? Here’s a taster.

Commentary wasn’t the only frontier we explored with Project L. What if the defining moments of an election could be told through music?

That question gave rise to BOPs (Beats of Politics), where news was transformed into songs.

Take our first BOP, for instance. As polls closed in the swing states, Syed Naqi Akhter captured the mood of the moment. Hear it!

And when voting resumed in Georgia, Arizona, and Wisconsin after bomb threats disrupted polling, Nichola Hunter-Warburton coaxed 'an anthem of resilience' out of AI. Later, as Trump pulled ahead of Harris, Nichola joined Syed and Gokul Aanandh Bhoopathy to create a reflective, slow-tempo song. Listen!

Our best work? Without a doubt, the songs built around the victory and concession speeches. Trump’s victory anthem: bold, brash, and impossible to ignore. Kamala Harris’s concession? An ode to resilience, layered with heartache and hope.

For these two, we went all in and created music videos, using a range of AI platforms guided by human oversight to ensure accuracy and relevance. ChatGPT-4 crafted the lyrics, Leonardo.AI and Stable Diffusion produced the artwork, Suno composed the music, and Runway ML animated the visuals.

What if politics could speak in images?

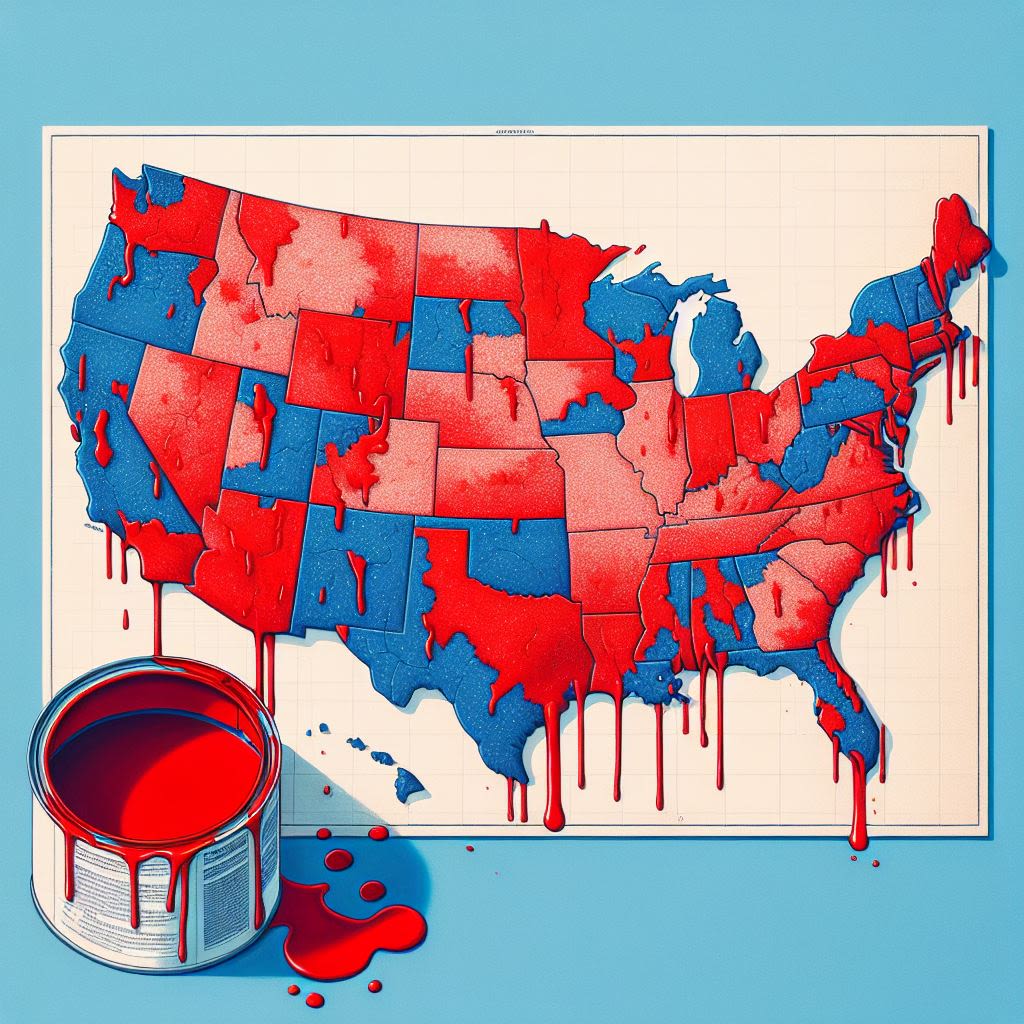

We also wanted to see how AI could reimagine election moments through visuals. What if historical and contemporary events could be brought to life as AI 'artwork'?

That question inspired WIF (What If), where visuals became a storytelling tool.

To create these visuals, we used DALL·E 3, Stable Diffusion and Midjourney. The images were hybrids, a genuine collaboration: AI provided the digital brushstrokes, and our journalists refined the outputs through multiple prompts.

Our experiments took us in several directions. We began with an impressionistic take, travelling back to George Washington’s era to reflect on a time when politics and responsibility felt profoundly different (check out the original post and explanatory video for more). From there, we turned to the present: capturing the tension in swing states, the moment Trump took the lead, and even how astronauts cast their votes (yes, they can, and they do), using touches of surrealism and pop art, among other styles.

We didn’t set out to task AI with podcasts—but, as the hours wore on, inspiration struck (or maybe it was the caffeine).

With Google’s NotebookLM proving to be a fantastic and surprisingly accurate tool, we thought, why not? And thus, two bonus podcasts were born.

In Election Insight, we tackled two critical topics: the hurdles disabled voters faced in Pennsylvania and the challenges journalists encountered covering the 2024 US election.

And then there’s Students Abroad. Ever wondered how American students manage to cast their votes while living overseas? Spoiler alert: it’s not easy.

What does this all add up to?

Why go to such lengths? The answer is simple: curiosity—curiosity about how AI might reshape the art of journalistic storytelling.

With AI driving rapid changes across the news industry—from content creation to ethics, newsgathering, and audience engagement—it’s inevitable that storytelling too will evolve. Just as the internet revolutionised how stories were presented—introducing formats like blogs, microblogs, listicles, and multimodal narratives—AI is pushing boundaries, creating new possibilities for telling stories. Tools once reserved for the largest newsrooms are now available to anyone with a creative spark, democratising journalism at an unprecedented pace.

The experiments under Project L—from transforming key moments into music to reimagining history through visuals and creating podcasts—offer a glimpse of what’s to come. In our view, journalists experimenting with AI are grappling with more than just new storytelling methods; they’re confronting a deeper challenge: defining humanity’s role in a narrative landscape increasingly shaped by machines.

Journalism has never been just about delivering information. At its core, it’s about forging connections: telling stories that challenge us, inspire us, and remind us of our shared humanity. In an AI-enhanced world, how will we keep telling the stories that make us human?

Notes on Project L

Project L stands for Project Lumos—because, yes, we’re all Harry Potter fans here. As part of the USA Votes 2024 project at Bournemouth University, Project L was led by MA Multimedia Journalism students who explored how AI might reshape storytelling in journalism.

Where to find us

Outputs, as originally published, are available on Instagram at @ProjectL.BU and on Mastodon Social at @ProjectL.

The Project L team

Gokul Aanandh Bhoopathy, Gift Osamwonyi, Hannah Clubley, Isabel Gallagher, Jennifer Chibuobasi, Karan Pratap Singh, Nichola Hunter-Warburton, Syed Naqi Akther, Thanh Hung Nguen, Tom Dinh

Editorial mentors

Chindu Sreedharan, Gloria Khamkar, and Jason Hallett (Bournemouth University); Rajendran P (BMCC, CUNY).

AI platforms we used

ChatGPT, Gemini Pro, Monica; Stable Diffusion, Midjourney, Leonardo; ElevenLabs, Revoicer, Google NotebookLM; Runway ML and Adobe's generative tools.

Get in touch!

Email Chindu at csreedharan@bournemouth.ac.uk

Postscript

The video underlying this credit slide captures the Project L team on the fateful night, hard at work. As you can see, we take ourselves very seriously.